Introduction

Hugging Face’s Generative AI Services (HUGS) make deploying and managing LLMs easier and faster. Now, with DigitalOcean’s 1-Click deployment for HUGS on GPU droplets, you can set up, scale, and optimize LLMs on a cloud infrastructure tailored for high performance. This guide walks you through deploying HUGS on a DigitalOcean GPU droplet and integrating it with Open WebUI, and it explains why this setup suits seamless, scalable LLM inference.

Prerequisites

- A DigitalOcean account with access to GPU droplets

- Familiarity with SSH and basic Docker commands

- An SSH key for logging into your droplet

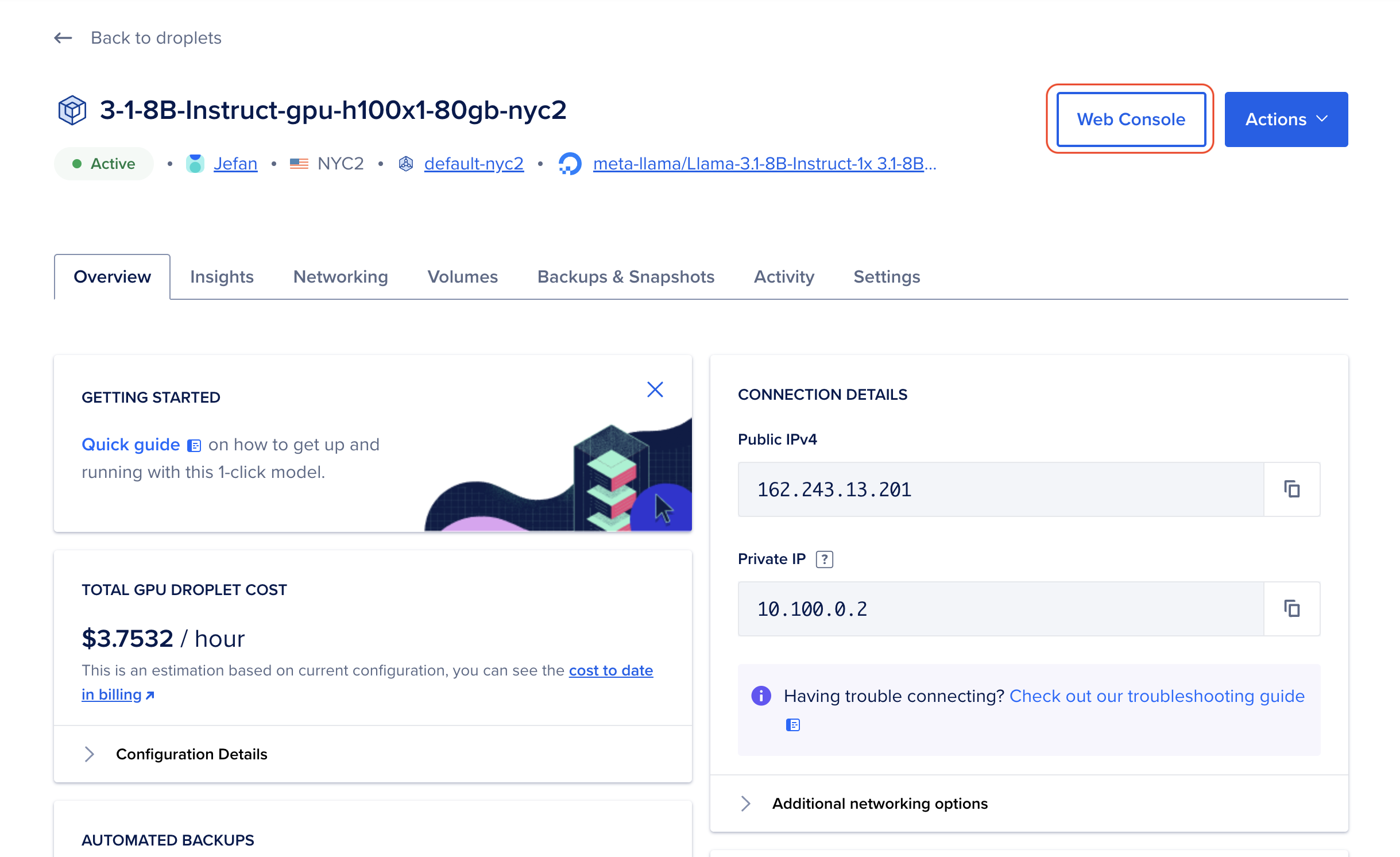

Step 1: Create and Access Your GPU Droplet

-

Set up the Droplet:

Go to DigitalOcean’s Droplets page and create a new GPU droplet using the Hugging Face HUGS 1-Click Deployment option. -

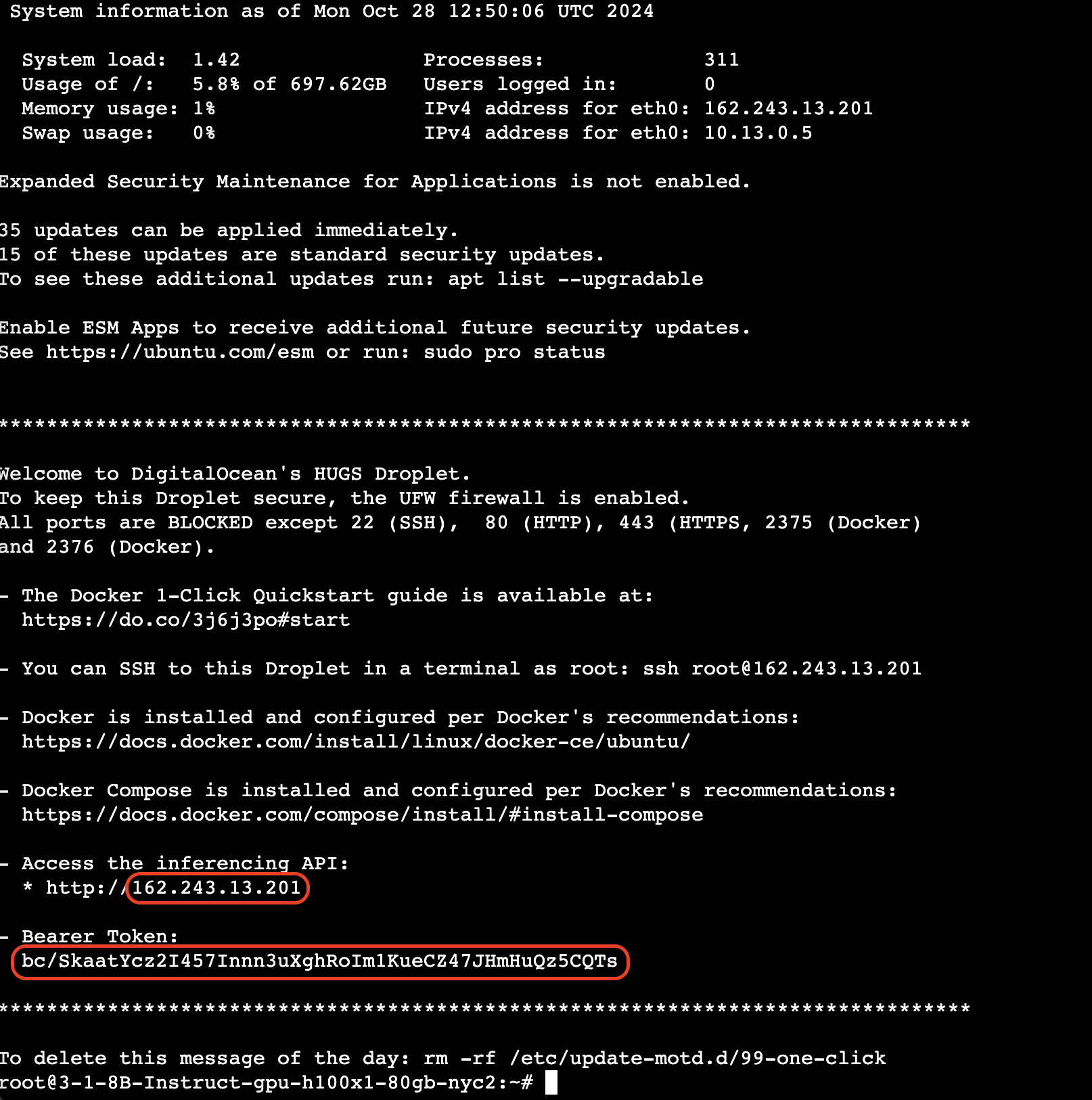

Access the Console:

Once your droplet is ready, click on its name in the Droplets section and select Launch Web Console. -

Make a note of the Message of the Day (MOTD): This contains the bearer token and inference endpoint for API access—you’ll need this later.
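If you prefer a local terminal to the web console, the SSH key from the prerequisites can be used instead. The sketch below assumes the default `root` login used by DigitalOcean droplets and a private key at `~/.ssh/id_ed25519`; the key path and the `connect_droplet` helper name are illustrative assumptions, so adjust them to your setup.

```shell
# Hypothetical helper: open an SSH session to the droplet.
# Usage: connect_droplet <droplet_ip> [path_to_private_key]
connect_droplet() {
  ip="$1"
  key="${2:-$HOME/.ssh/id_ed25519}"   # assumed key location; change to match yours
  ssh -i "$key" "root@$ip"
}
```

Calling `connect_droplet 203.0.113.10` (a documentation-only address) would open a session as `root` on that host. The MOTD should be displayed at login here as well.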

Step 2: Start Hugging Face HUGS

Hugging Face HUGS will automatically start after droplet setup. To verify, check the status of the Caddy service managing the inference API:

sudo systemctl status caddy

Allow 5-10 minutes for the model to fully load. Planned future updates aim to reduce this warm-up time.
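Rather than guessing when the warm-up has finished, you can poll the inference API until it answers. This is a minimal sketch, not an official readiness check: it assumes the endpoint exposes an OpenAI-style `/v1/models` route (suggested by the `/v1` path used later in this guide) and that the bearer token from the MOTD is accepted in an `Authorization` header. The `wait_for_hugs` name and its default of 40 attempts at 15-second intervals (about 10 minutes) are illustrative choices.

```shell
# Poll the endpoint until it returns a successful HTTP status, or give up.
# Usage: wait_for_hugs <base_url> <bearer_token> [attempts] [delay_seconds]
wait_for_hugs() {
  base_url="$1"
  token="$2"
  attempts="${3:-40}"
  delay="${4:-15}"
  i=1
  while [ "$i" -le "$attempts" ]; do
    # -f makes curl exit non-zero on HTTP errors; /v1/models is an assumed route
    if curl -fsS -o /dev/null -H "Authorization: Bearer $token" "$base_url/v1/models"; then
      echo "HUGS is ready after $i attempt(s)"
      return 0
    fi
    echo "Not ready yet (attempt $i/$attempts), retrying in ${delay}s..."
    sleep "$delay"
    i=$((i + 1))
  done
  echo "Gave up waiting for $base_url" >&2
  return 1
}
```

For example, `wait_for_hugs "http://$DROPLET_IP" "$HUGS_TOKEN"` would block until the model responds, where both variables are placeholders you set from the MOTD values.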

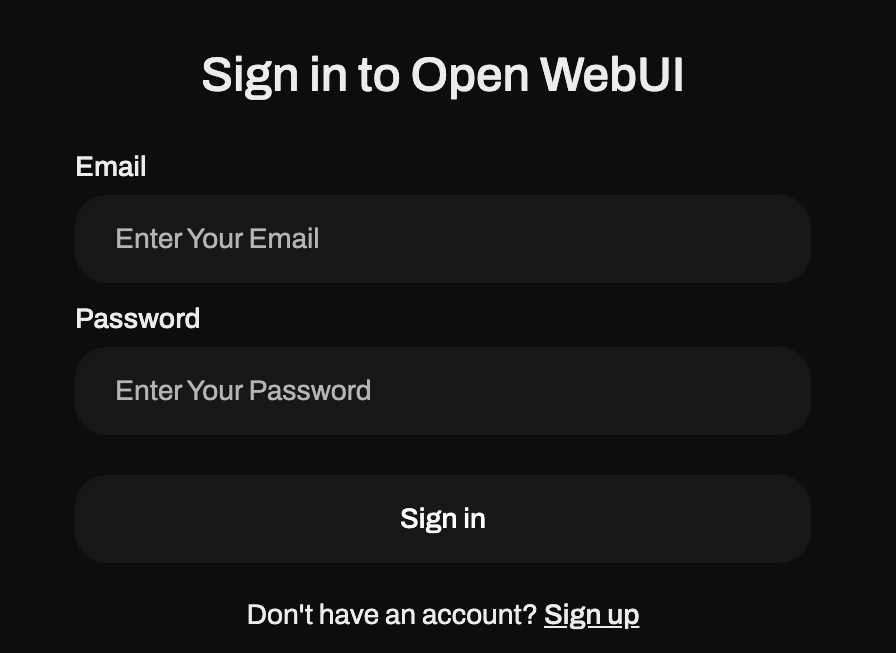

Step 3: Start Open WebUI

Launch Open WebUI using Docker on another droplet:

docker run -d -p 3000:8080 --add-host=host.docker.internal:host-gateway -v open-webui:/app/backend/data --name open-webui --restart always ghcr.io/open-webui/open-webui:main

Once Open WebUI is running, access it at http://<your_droplet_ip>:3000.
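If the page does not load, it helps to confirm that the container actually started. The sketch below relies on the container name `open-webui` set by `--name` in the command above; the `check_open_webui` helper name is illustrative. Prefix the `docker` calls with `sudo` if your user is not allowed to talk to the Docker daemon.

```shell
# Report whether the open-webui container is up, then show its recent logs.
check_open_webui() {
  status=$(docker ps --filter "name=open-webui" --format '{{.Names}}: {{.Status}}')
  if [ -z "$status" ]; then
    echo "open-webui is not running" >&2
    return 1
  fi
  echo "$status"
  # The last 20 log lines are usually enough to spot a startup error
  docker logs --tail 20 open-webui
}
```

Run `check_open_webui` on the droplet hosting Open WebUI; a non-zero exit status means no running container with that name was found.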

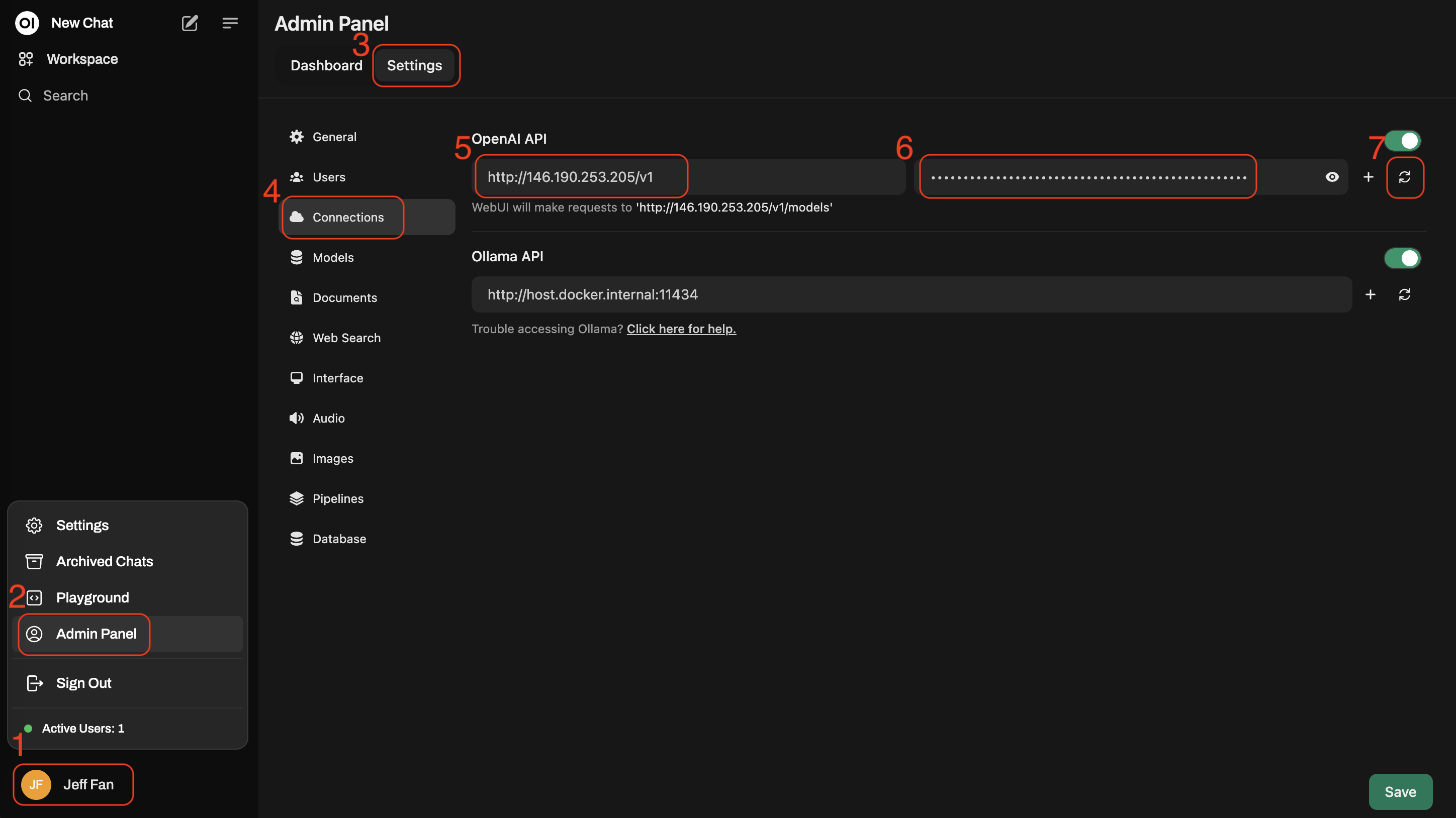

Step 4: Integrate HUGS with Open WebUI

To connect Open WebUI with Hugging Face HUGS:

-

Open Settings:

- In Open WebUI, click your user icon at the bottom left, then click Settings.

-

Go to Admin:

- Navigate to the Admin tab, then select Connections.

-

Set the Inference Endpoint:

- In the API link field, enter your droplet’s IP followed by

/v1. If a specific port is required, include it, e.g.,http://<your_droplet_ip>/v1. - Use the API token from the MOTD for authentication.

- In the API link field, enter your droplet’s IP followed by

-

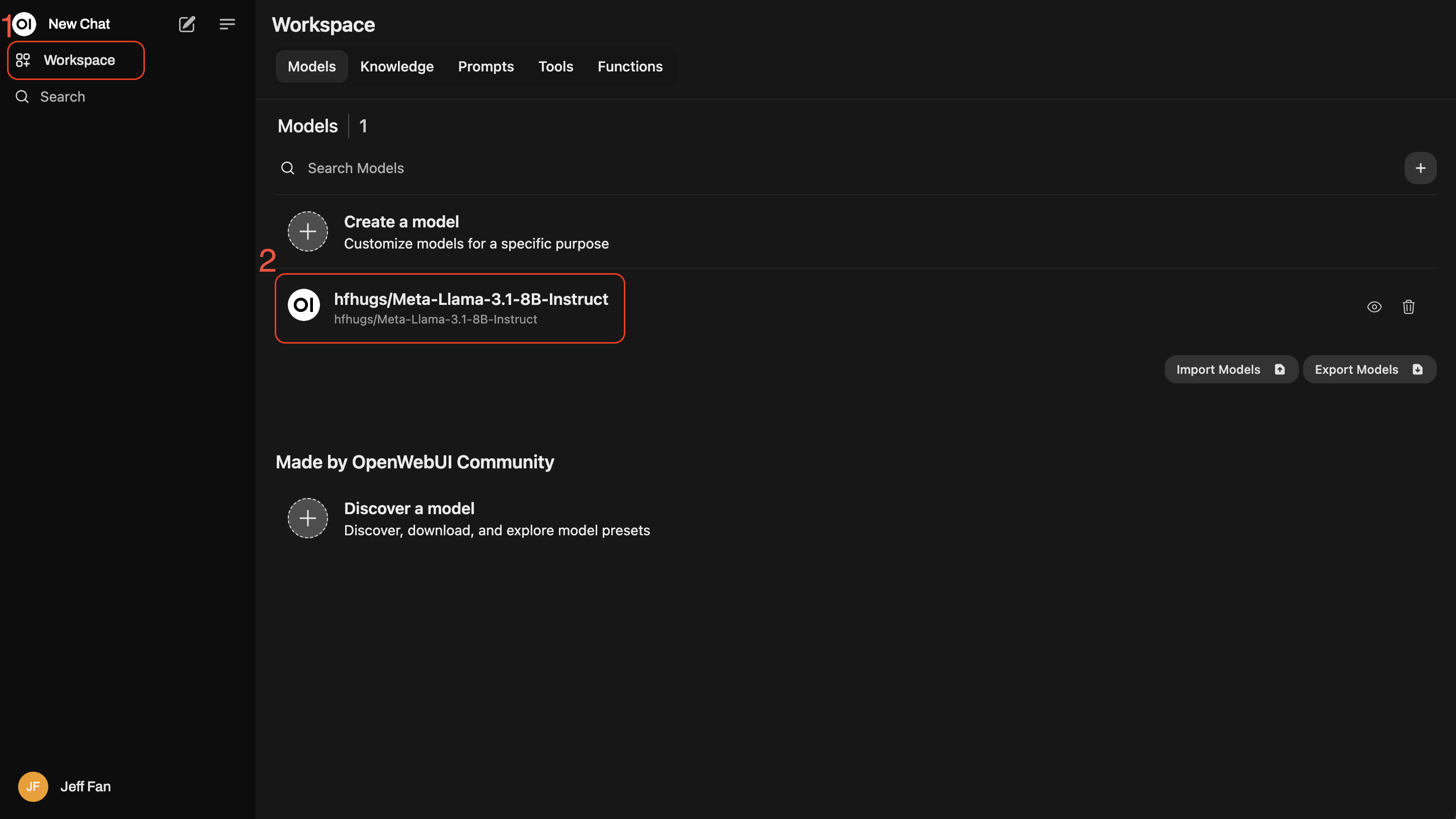

Verify Connection:

- Click Verify Connection. A green light confirms a successful connection. Open WebUI will then auto-detect available models, such as

hfhgus/Meta-Llama.

- Click Verify Connection. A green light confirms a successful connection. Open WebUI will then auto-detect available models, such as
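If verification fails, it can help to call the same endpoint directly and rule out Open WebUI as the cause. The following is a sketch under assumptions: it presumes the `/v1` endpoint follows the OpenAI chat-completions request shape, which is what an OpenAI-style connection in Open WebUI expects. The helper names, the `max_tokens` value, and the model argument are illustrative; use the model name that Open WebUI lists after a successful verification.

```shell
# Build a minimal chat-completions JSON body.
# Limitation: the prompt must not contain double quotes or backslashes,
# because this sketch does no JSON escaping.
build_chat_payload() {
  model="$1"
  prompt="$2"
  printf '{"model":"%s","messages":[{"role":"user","content":"%s"}],"max_tokens":128}' "$model" "$prompt"
}

# Send one prompt to the endpoint with the bearer token from the MOTD.
# Usage: hugs_chat <base_url> <bearer_token> <model> <prompt>
hugs_chat() {
  curl -sS "$1/v1/chat/completions" \
    -H "Authorization: Bearer $2" \
    -H "Content-Type: application/json" \
    -d "$(build_chat_payload "$3" "$4")"
}
```

A call such as `hugs_chat "http://$DROPLET_IP" "$HUGS_TOKEN" "$MODEL_NAME" "What is DigitalOcean?"` should print a JSON response if the endpoint and token are correct; all three variables are placeholders you set yourself.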

Step 5: Start Chatting with the Model

With HUGS integrated into Open WebUI, you’re ready to interact with your LLM:

- Ask questions like “What is DigitalOcean?”

- Monitor the request logs from the container while asking a follow-up question, such as “Does DigitalOcean offer object storage?”:

sudo docker ps

sudo docker logs <your-container-ID> -f
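The two commands above can be combined so that you do not have to copy the container ID by hand. This sketch selects the first running container whose image name contains a substring you supply; the helper names are hypothetical, and because the image name of the HUGS container is not given here, check the `docker ps` output first to pick a suitable substring. Prefix the `docker` calls with `sudo` if required on your droplet.

```shell
# Print the ID of the first running container whose image name contains $1.
find_container() {
  docker ps --format '{{.ID}} {{.Image}}' |
    awk -v pat="$1" 'index($2, pat) { print $1; exit }'
}

# Follow the logs of that container, starting from the last 50 lines.
follow_logs() {
  cid=$(find_container "$1")
  if [ -z "$cid" ]; then
    echo "no running container matches '$1'" >&2
    return 1
  fi
  docker logs --tail 50 -f "$cid"
}
```

With this in place, `follow_logs <image-substring>` streams the request logs while you chat in Open WebUI; press Ctrl+C to stop following.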

Conclusions

With HUGS deployed on DigitalOcean’s GPU droplet and Open WebUI, you can efficiently manage, scale, and optimize LLM inference. This setup eliminates hardware optimization concerns and provides a ready-to-scale solution for delivering fast, reliable responses across multiple regions.

Why Choose HUGS on DigitalOcean GPU Droplets?

- Ease of Deployment and Simplified Management: Deploying HUGS with DigitalOcean’s one-click setup is straightforward. No manual configuration is needed; DigitalOcean and Hugging Face handle the backend, allowing you to focus on scaling.

- Optimized Performance for Large-Scale Inference: HUGS on DigitalOcean GPUs ensures optimal performance, running LLMs efficiently on GPU hardware without manual tuning.

- Scalability and Flexibility: DigitalOcean’s infrastructure supports scalable deployments with load balancers for high availability, letting you serve users globally with low latency.

By using Hugging Face HUGS on DigitalOcean GPU droplets, you not only benefit from high-performance LLM inference but also gain the flexibility to scale and manage the deployment effortlessly. This combination of optimized hardware, scalability, and simplicity makes DigitalOcean an excellent choice for production-level AI workloads.